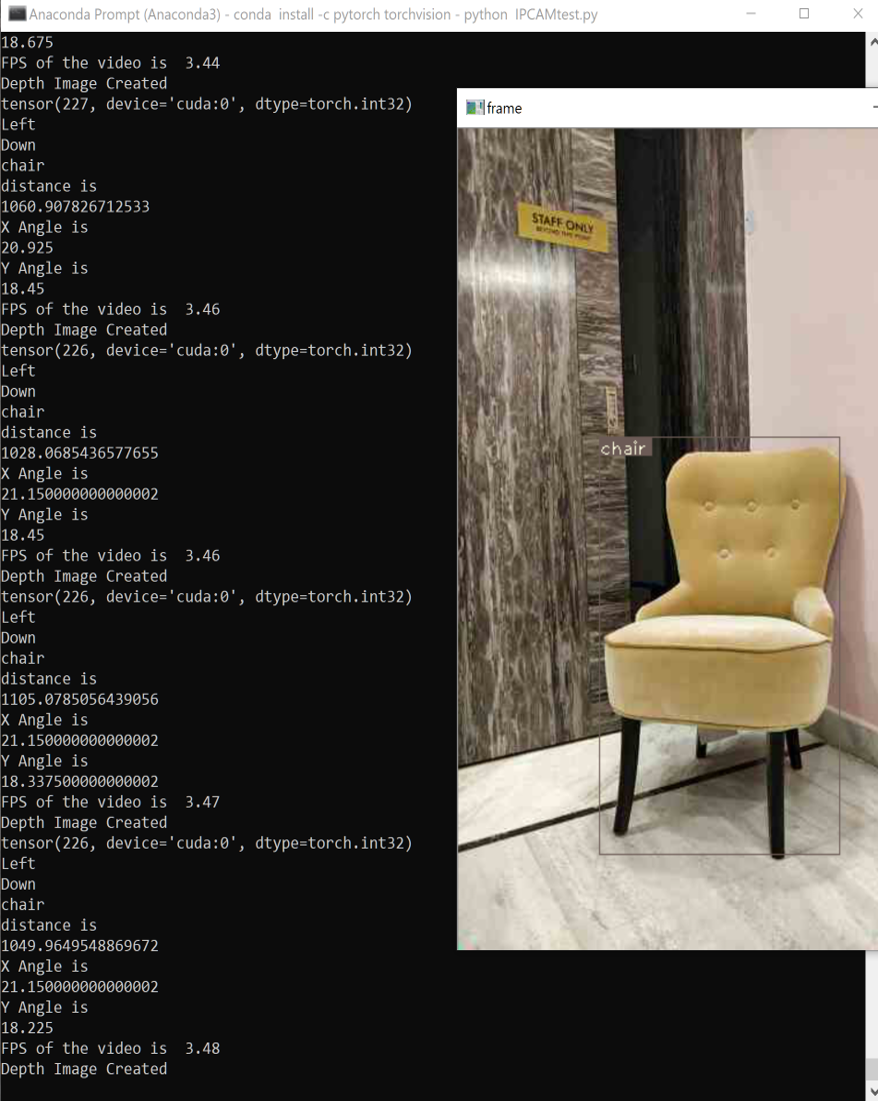

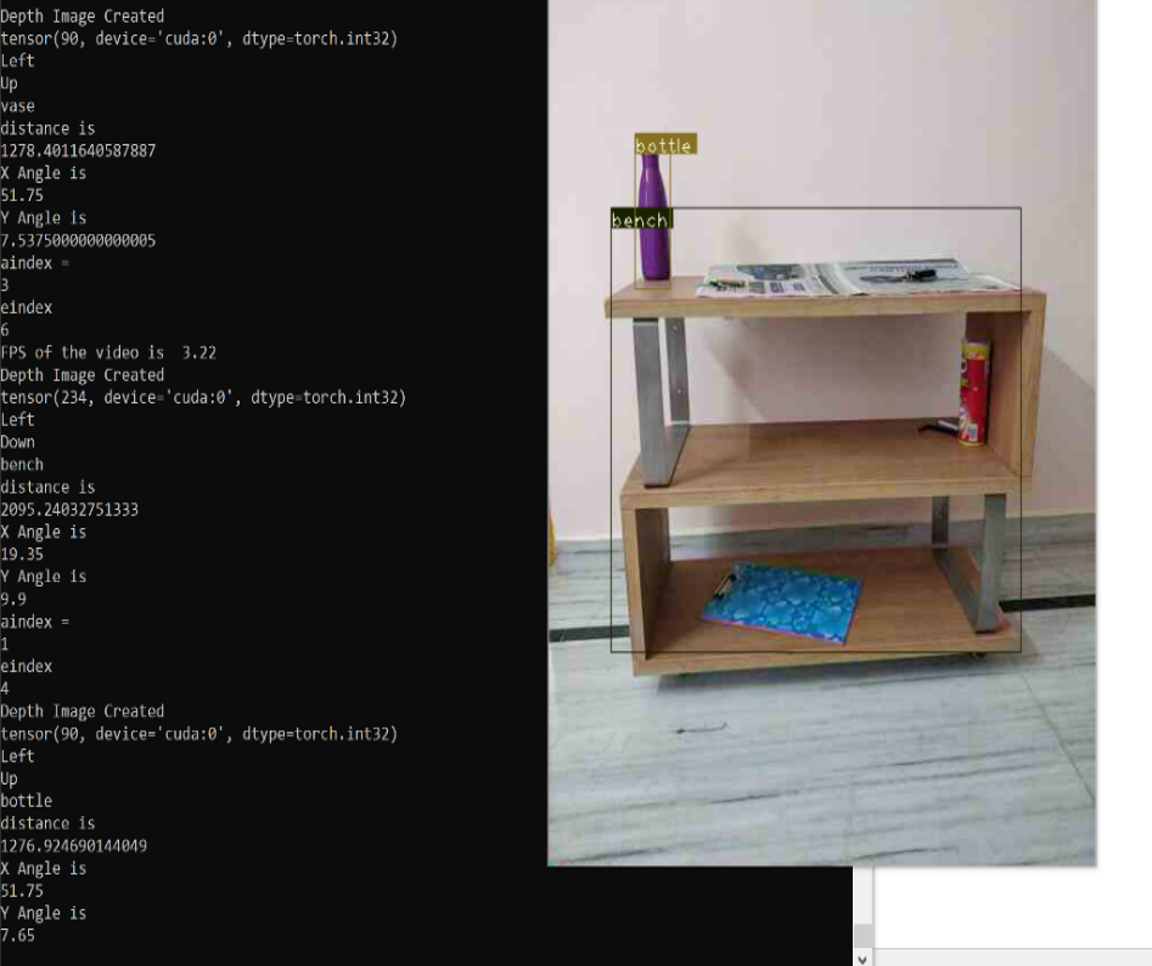

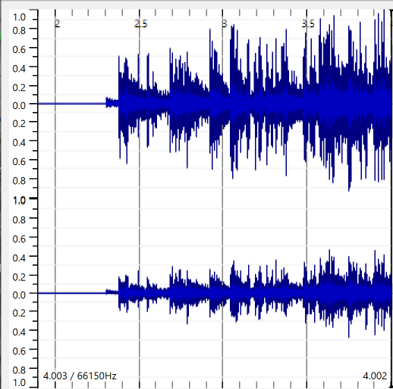

Many visually impaired people stay at home to avoid the difficulty of navigating from one place to another. Navigating indoor areas such as hospitals and malls is especially hard because of external factors like noise, crowds, and smells. Here, we aimed to develop real-time spatial audio generating software to assist visually impaired people in navigation. YOLO performs object detection with good accuracy, identifying the class of each detected object and its location in the frame. Monocular depth estimation computes a disparity map from a single image. Simple linear interpolation then yields the azimuth and elevation angles. HRTFs convert a mono audio source into spatial audio using these azimuth and elevation angles. The intensity of the generated spatial audio is varied according to the distance of the detected object. The depth of the detected object was calculated with an error in the range of 40-60 cm. Overall, we conclude that the spatial audio generated in real time clearly conveyed the object's orientation in 2D space and was helpful for the navigation of visually impaired people.
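The interpolation and distance-scaling steps described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes the angles are interpolated linearly from the centre of the detected bounding box, and the function names, the field-of-view values (60° horizontal, 40° vertical), and the gain range are all hypothetical choices that the abstract does not specify.

```python
def pixel_to_angles(cx, cy, width, height, h_fov_deg=60.0, v_fov_deg=40.0):
    """Linearly map a bounding-box centre (cx, cy) in pixels to angles in degrees.

    The frame centre maps to (0, 0). Positive azimuth is to the right,
    positive elevation is upward. The FOV defaults are assumed values.
    """
    azimuth = (cx / width - 0.5) * h_fov_deg
    elevation = (0.5 - cy / height) * v_fov_deg  # image y grows downward
    return azimuth, elevation


def distance_gain(distance_m, min_dist=0.5, max_dist=5.0, min_gain=0.1):
    """Scale audio intensity linearly with distance: nearer objects are louder.

    Distances are clamped to [min_dist, max_dist]; the range and the
    minimum gain are assumptions made for this sketch.
    """
    d = min(max(distance_m, min_dist), max_dist)
    t = (d - min_dist) / (max_dist - min_dist)
    return 1.0 - t * (1.0 - min_gain)


if __name__ == "__main__":
    # Hypothetical detection: box centre in a 640x480 frame, 2 m away.
    az, el = pixel_to_angles(480, 120, 640, 480)
    print(f"azimuth={az:.1f} deg, elevation={el:.1f} deg, "
          f"gain={distance_gain(2.0):.2f}")
```

The resulting azimuth and elevation would then select the HRTF pair applied to the mono source, and the gain would scale the output amplitude.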
