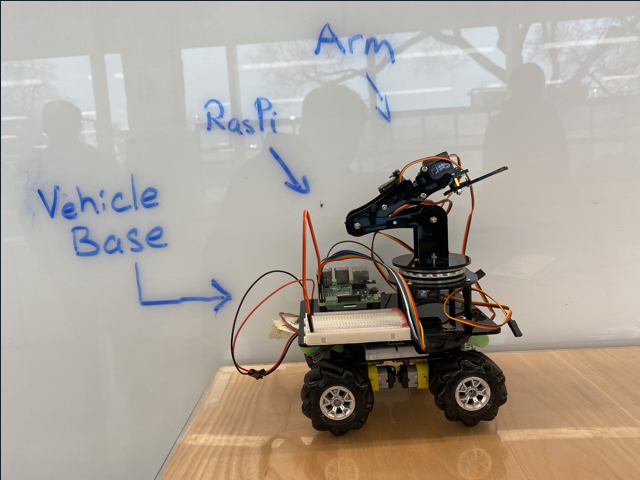

Robotics and gesture-control technology are two rising fields of innovation that attract both academic and commercial attention. Robots used for remote operation and environmental manipulation occupy an important niche in markets and industries seeking varying levels of mechanical automation. However, most such designs rely on analog control mechanisms. In this project, we aim to replace these traditional controls with an intuitive gesture-based control mechanism by modifying a popular project: the vehicular robotic arm. Gesture sensors in the controllers capture the user's hand-movement data, and an inverse kinematics algorithm maps each gesture to a unique robot movement (locomotion or arm movement), yielding a set of gesture-based commands for remote control. We anticipate that gesture capture and this mapping will be the core challenges of the project. An added camera module gives users visual feedback on the terrain and the robot's position to aid in maneuvering the robot remotely. The expected end result is a fully gesture-operated robotic arm capable of vehicular locomotion that users of varying experience levels can operate remotely, accurately, and easily. Our work highlights the potential of gesture mimicry and gesture-based control mechanisms in the robotics industry for both academic and commercial applications. We believe that replacing the analog experience with a smoother gesture-based experience will help gesture-based robotics proliferate into public use.
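
To illustrate the inverse kinematics step mentioned above, here is a minimal sketch for a planar two-link arm. It is not the project's actual implementation: the two-link geometry, the link lengths, and the function names `two_link_ik` and `forward_kinematics` are assumptions made for illustration. Given a target point for the end of the arm (for example, one derived from a hand position), it solves for the shoulder and elbow joint angles.

```python
import math


def two_link_ik(x, y, l1, l2):
    """Joint angles (shoulder, elbow) in radians that place the tip of a
    planar two-link arm at (x, y). Hypothetical helper, one of the two
    possible elbow configurations (elbow angle in [0, pi])."""
    dist_sq = x * x + y * y
    # Law of cosines gives the cosine of the elbow angle.
    cos_elbow = (dist_sq - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if abs(cos_elbow) > 1.0 + 1e-9:
        raise ValueError("target is outside the arm's reachable workspace")
    # Clamp tiny floating-point overshoot at the workspace boundary.
    cos_elbow = max(-1.0, min(1.0, cos_elbow))
    elbow = math.acos(cos_elbow)
    # Shoulder angle: direction to the target minus the offset
    # introduced by the bent second link.
    shoulder = math.atan2(y, x) - math.atan2(
        l2 * math.sin(elbow), l1 + l2 * math.cos(elbow)
    )
    return shoulder, elbow


def forward_kinematics(shoulder, elbow, l1, l2):
    """Tip position for the given joint angles; used to check the IK result."""
    x = l1 * math.cos(shoulder) + l2 * math.cos(shoulder + elbow)
    y = l1 * math.sin(shoulder) + l2 * math.sin(shoulder + elbow)
    return x, y


if __name__ == "__main__":
    # Assumed link lengths (arbitrary units) and an assumed target point.
    angles = two_link_ik(1.0, 1.0, 1.0, 1.0)
    print("joint angles (rad):", angles)
    print("reconstructed tip:", forward_kinematics(*angles, 1.0, 1.0))
```

A real arm with more joints would typically need a numerical or library-based solver, but the same idea applies: the gesture supplies a target pose, and the solver returns the joint angles that reach it.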

Portable Wireless Oscilloscope